The research hypotheses and technological developments are related to:

Central to this work is the building of a new guide and the evaluation and understanding of user experience. On the one hand, the IMG provides an essential tool for enriching access to 2D artworks for blind and partially blind people within museums and cultural heritage sites. On the other hand, it provides a multisensory experience that enriches and deepens the experience of 2D artworks for blind, partially blind and non-blind people alike.

The IMG seeks to provide functional equivalence (and not sensory equivalence), based on the principle that enriched mental representations of artworks will emerge through concurrent sensory actions, motor actions, and verbal description. Thus, as an inclusive tool, the ad hoc technology may contribute to the emergence of new aesthetic impressions which can be shared with others, irrespective of visual experience.

Working with blind and partially blind researchers and end-users will allow us to better understand the mental process that leads to the emergence of aesthetic impressions of artworks in blind, partially blind and non-blind people.

The IMG project combines sensory stimulation (visual, auditory and tactile) and motor actions (haptic exploration) with the cognitive understanding extracted from speech (audio description).

The sensorimotor theory of perception will provide a framework for understanding how these new ways of experiencing art can be reinforced (assisted) by our perceptions and associated actions. The project is also framed by the concept of « blindness gain ». It will result in the creation of novel ways of experiencing 2D artworks for blind, partially blind and non-blind users, in a way that rethinks traditionally « sighted » ways of accessing art. It does so by combining the art of description (specifically, describing visual arts in non-visual ways) as a tool to enhance access for all [Ear 17] with haptic and auditory experience (with or without vision).

Moreover, the results of our preliminary experimental evaluations with blind and partially blind end-users (lead users) of tactile representations of basic 2D data displayed on existing touch stimulation devices [Riv 19, Rom 18, Gay 18, Cho 18] have confirmed that:

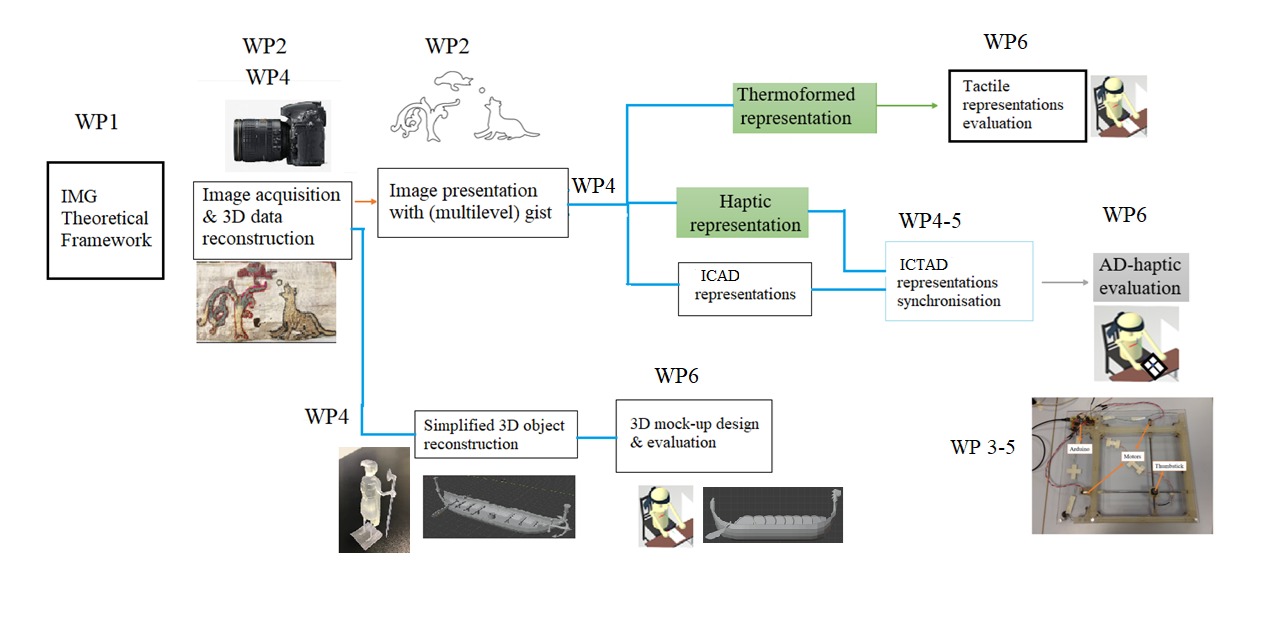

The IMG project and its tangible outcome, the IMG device (cf. figure 2 and figure 3), aim to prototype a new multimodal, digital, programmable and interactive portable museum guide, suitable for any level of sight (from totally blind to fully sighted). From images acquired with an ad hoc vision system, this tool will build multimodal representations of the imaged objects and scenes (audio-tactile maps, audio-tactile representations of artworks, and 3D-reconstructed objects, i.e. mock-ups).

Figure 2

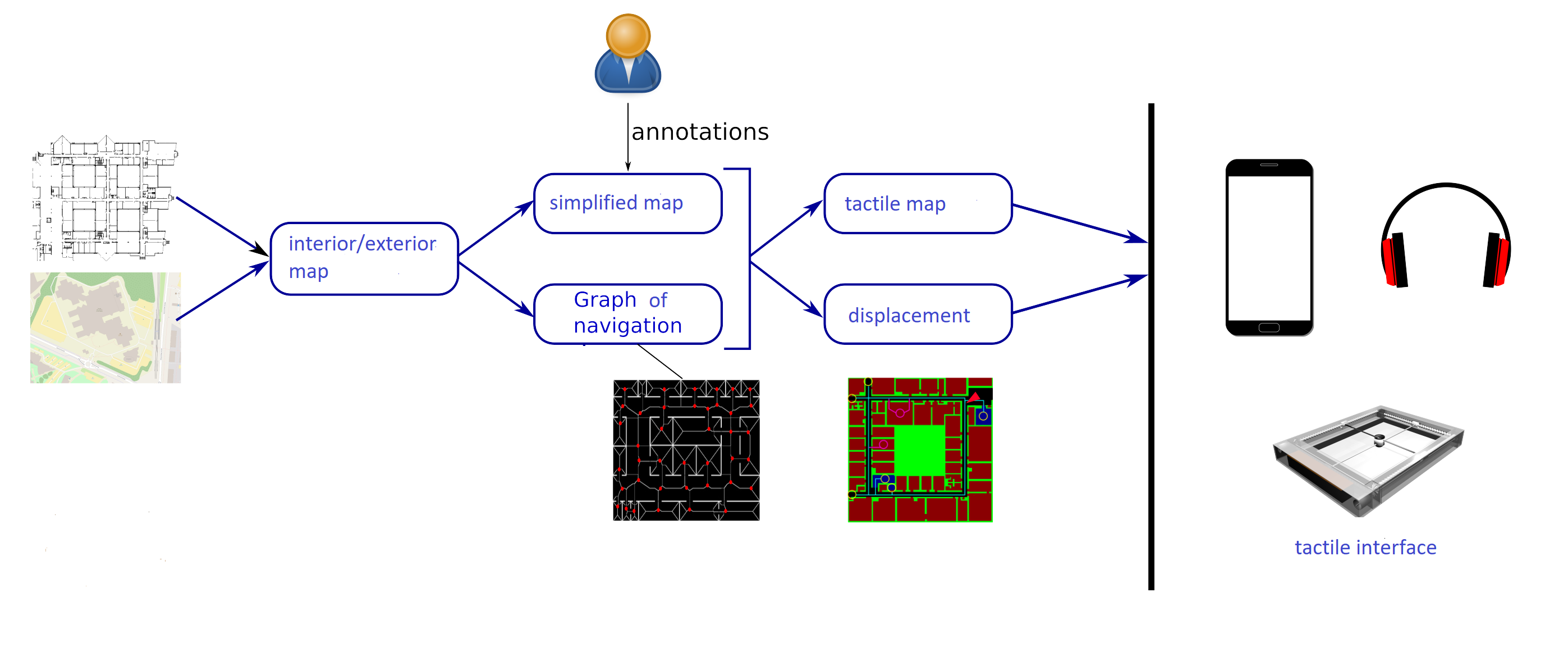

Figure 3

The IMG system is made up of cooperating hardware and software. Its hardware elements are the following: a screen (on which an image is displayed); a mechatronic case, named F2T (Force Feedback Tablet), adaptable to the size of any screen; a vision system; and optional (bone-conduction) headphones. One (or more) mobile joystick(s) and a set of keys on the F2T will allow museum visitors to interact with the screen (creating visual-tactile-haptic images).

Several basic software applications will be developed: to create and add tactile maps (indoor and outdoor), to provide localization and tracking tools, and to create interactive haptic representations of artworks combined with audio description.
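To make the idea of an audio-tactile map more concrete, here is a minimal sketch of one possible data structure, assuming the image shown on the screen has been divided into labelled regions, each carrying a force-feedback level and an audio description. The names `Region`, `AudioTactileMap`, `resistance` and the toy "painting" are hypothetical illustrations, not the F2T software or its API: the sketch only shows how a joystick position could be mapped to the region under it, and how a move into a different region could be detected (for example to trigger a haptic cue and speak the new description).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """One semantic region of an audio-tactile map (hypothetical schema)."""
    name: str
    resistance: float  # assumed force-feedback level in [0, 1]
    description: str   # text to be spoken as audio description


class AudioTactileMap:
    """Label grid aligned with the screen, plus per-region haptic/audio data."""

    def __init__(self, labels, regions):
        # labels: list of rows; each cell holds the id of the region it belongs to
        self.labels = labels
        self.regions = regions
        self.height = len(labels)
        self.width = len(labels[0])

    def region_id(self, x, y):
        """Region id under screen cell (x, y); out-of-range positions are clamped."""
        x = min(max(x, 0), self.width - 1)
        y = min(max(y, 0), self.height - 1)
        return self.labels[y][x]

    def query(self, x, y):
        """Region (resistance + description) the joystick is currently over."""
        return self.regions[self.region_id(x, y)]

    def crossed_boundary(self, x0, y0, x1, y1):
        """True when a move from (x0, y0) to (x1, y1) enters a different region."""
        return self.region_id(x0, y0) != self.region_id(x1, y1)


# Toy example (hypothetical): a 4x3 "painting" with sky, a tree and the ground.
labels = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [2, 2, 2, 2],
]
regions = {
    0: Region("sky", 0.1, "A pale sky."),
    1: Region("tree", 0.6, "A dark tree on the right."),
    2: Region("ground", 0.3, "A strip of ground."),
}
guide_map = AudioTactileMap(labels, regions)
print(guide_map.query(3, 0).name)              # tree
print(guide_map.crossed_boundary(1, 0, 2, 0))  # True
```

Comparing region ids rather than `Region` objects keeps two distinct regions with identical attributes from being treated as the same area.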

Thanks to image processing and computer vision techniques, such multimodal representations will be understandable through touch (without seeing). Specific image segmentation algorithms will be designed in order to simplify images of the artworks, extract the most salient features (identified in collaboration with museum curators and visually impaired people, VIP), and transpose them onto the F2T.
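The specific segmentation algorithms are still to be designed, so the sketch below is only a stand-in showing the general shape of such a pipeline under simple assumptions: a grayscale image (rows of 0 to 255 values) is segmented by quantizing intensities into a few bands, and the boundaries between bands are kept as the contour lines to be rendered on the tablet. The function names and the choice of intensity quantization are hypothetical; a real pipeline would use richer segmentation and the salient features chosen with curators and users.

```python
def quantize(gray, levels):
    """Segment a grayscale image (rows of 0-255 ints) into `levels` intensity bands."""
    step = 256 / levels
    return [[min(int(v // step), levels - 1) for v in row] for row in gray]


def boundaries(labels):
    """Binary edge map: 1 where the right or lower neighbour has another label."""
    h, w = len(labels), len(labels[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            right = x + 1 < w and labels[y][x + 1] != labels[y][x]
            down = y + 1 < h and labels[y + 1][x] != labels[y][x]
            edges[y][x] = 1 if (right or down) else 0
    return edges


def tactile_outline(gray, levels=3):
    """Simplify an image to the contour lines to be rendered as tactile relief."""
    return boundaries(quantize(gray, levels))


# Toy 4x3 image: dark area on the left, bright area on the right.
image = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]
for row in tactile_outline(image, levels=2):
    print(row)  # [0, 1, 0, 0] on every row
```

Keeping only region boundaries reflects the goal of simplification: a small number of clear tactile lines is easier to explore by touch than the full detail of the image.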

A feeling of the artwork’s depth and texture will be provided to VIPs through high-resolution 3D-printed mock-ups. These 3D models will be created automatically by combining point clouds estimated with LIDAR-like systems with high-precision surface orientation estimates, obtained by turning an existing Reflectance Transform Imaging (RTI) system into a photometric stereo-based 3D reconstruction platform. Finally, a basic programming library will be built to support the design of open-source applications in high-level programming languages.
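An RTI system captures many images of the same surface under known light directions, which is exactly the input photometric stereo needs. As a minimal sketch, assuming a matte (Lambertian) surface, no shadows and calibrated unit light directions, the classic per-pixel estimate solves $I_k = \rho\,(\mathbf{l}_k \cdot \mathbf{n})$ in the least-squares sense for $\mathbf{g} = \rho\,\mathbf{n}$, then takes the albedo $\rho = \lVert \mathbf{g} \rVert$ and the normal $\mathbf{n} = \mathbf{g} / \lVert \mathbf{g} \rVert$. The function names are hypothetical, and the fusion with LIDAR point clouds mentioned above is not shown.

```python
import math


def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve the 3x3 system a x = b with Cramer's rule."""
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("light directions are (nearly) coplanar")
    x = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]  # replace column `col` by the right-hand side
        x.append(det3(m) / d)
    return x


def photometric_stereo_pixel(lights, intensities):
    """Lambertian photometric stereo for one pixel (least squares, k >= 3 lights).

    Model: I_k = albedo * dot(l_k, n). With g = albedo * n, the normal
    equations (L^T L) g = L^T I give g; then albedo = |g| and n = g / |g|.
    """
    ltl = [[sum(l[i] * l[j] for l in lights) for j in range(3)] for i in range(3)]
    lti = [sum(l[i] * v for l, v in zip(lights, intensities)) for i in range(3)]
    g = solve3(ltl, lti)
    albedo = math.sqrt(sum(c * c for c in g))
    if albedo < 1e-12:
        raise ValueError("zero albedo: the normal is undefined")
    return albedo, [c / albedo for c in g]


# Four known, unit-length light directions (assumed calibrated, e.g. on an RTI dome).
lights = [(0.0, 0.0, 1.0), (0.6, 0.0, 0.8), (0.0, 0.6, 0.8), (-0.6, 0.0, 0.8)]
# Synthetic intensities for a surface facing the camera with albedo 0.5.
intensities = [0.5 * l[2] for l in lights]
albedo, normal = photometric_stereo_pixel(lights, intensities)
print(albedo, normal)
```

Processing a whole image would repeat this for every pixel, typically vectorized (for instance with NumPy's `numpy.linalg.lstsq`); the resulting normal field can then be integrated into a height map and merged with the coarser LIDAR geometry.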
